Quick Upgrade

Validators perform critical functions for the network and, as such, have a strict uptime requirement. Validators may have to go offline for short periods to upgrade the client software or the host machine. A standard client upgrade usually only requires you to stop the service, replace the binary (or the Docker container) and restart the service. This can be done within a single session (4 hours) with minimal downtime.

Docker Container

For a node running in a Docker container, follow these steps to upgrade your node client version:

- Stop and rename the Docker container so that it can be re-created with the latest image.

- Pull the latest Docker image from GitHub:

- Re-create the validator container using the latest image:

Info: Re-creating the Docker container will not cause your node to re-sync from block 0, as long as the container is set up with the correct volume mapping as per the instructions.

- Confirm on Telemetry that your node is working correctly and running the latest version, then remove the old container:
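The exact commands for these steps depend on the container name, image reference and run flags from the setup instructions, none of which appear in this section. The following is a minimal Python sketch, assuming the standard docker CLI (docker stop, rename, pull, run and rm). It only builds and prints the command sequence for review rather than executing it. The function name, container name, image reference and flags are hypothetical placeholders: substitute the values from your own setup and keep the same volume mapping so the node does not re-sync.

```python
import shlex
from typing import List, Sequence


def upgrade_plan(container: str, image: str,
                 docker_opts: Sequence[str],
                 node_args: Sequence[str]) -> List[List[str]]:
    """Return the docker commands for the quick upgrade, in order.

    All arguments are hypothetical placeholders:
      container   -- name of the running validator container
      image       -- image reference to pull (use the one from your setup)
      docker_opts -- `docker run` options from your setup, including the
                     volume mapping that preserves chain data
      node_args   -- arguments passed to the node binary after the image
    """
    old = f"{container}-old"  # hypothetical name for the stopped backup
    return [
        ["docker", "stop", container],           # stop the running node
        ["docker", "rename", container, old],    # free the name for re-creation
        ["docker", "pull", image],               # fetch the latest image
        # Re-create with the same options; docker run takes options, then image, then args.
        ["docker", "run", "--name", container, *docker_opts, image, *node_args],
        # Run last, only after Telemetry shows the new version:
        ["docker", "rm", old],
    ]


if __name__ == "__main__":
    plan = upgrade_plan(
        "validator",                 # hypothetical container name
        "example/node:latest",       # hypothetical image reference
        ["-v", "/data:/data"],       # hypothetical volume mapping
        ["--validator"],             # hypothetical node flag
    )
    for cmd in plan:
        print(shlex.join(cmd))
```

Printing instead of running keeps the final docker rm a deliberate manual step, taken only once Telemetry confirms the new version.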

Long-lead Upgrade

Validators may also need to perform long-lead maintenance tasks that span more than one session. In this case, an active validator may choose to chill their stash, perform the maintenance and then request to validate again. Alternatively, the validator may substitute the active validator server with another, allowing the former to undergo maintenance with zero downtime. This section describes how to seamlessly substitute Node A, an active validator server, with Node B, a substitute validator node, so that Node A can be upgraded or maintained.

Step 1: At Session N

- Follow steps 1 and 2 from the Setup Instructions above to set up the new Node B.

- Go to the Portal Extrinsics page and submit a session.setKeys extrinsic with the values shown in the screenshot below, using the session keys value from step 2 of the Setup Instructions.

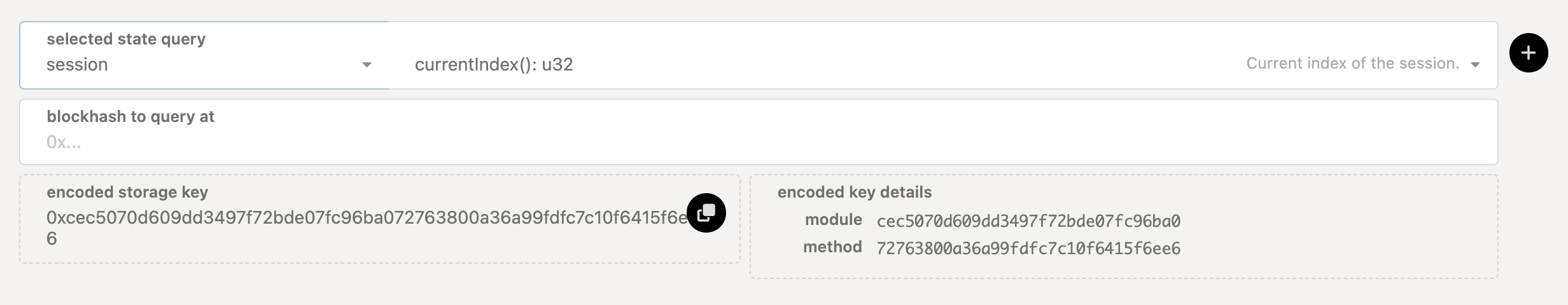

- Go to the Portal Chain State page and query session.currentIndex() to note the session in which this extrinsic was executed.

- Allow the current session to elapse and then wait for two full sessions.

Info:

You must keep Node A running during this time.

session.setKeys does not take effect immediately; two full sessions must elapse first. If you switch off Node A too early, you risk being removed from the active set.

Step 2: At Session N+3
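The N+3 in this heading follows from the waiting rule in Step 1: the remainder of session N elapses, then two full sessions (N+1 and N+2) pass, so the handover is checked at the start of session N+3. The following is a minimal Python sketch of that arithmetic, assuming the 4-hour session length quoted in the Quick Upgrade section (other networks may use a different length). The function names and the example session index are hypothetical.

```python
SESSION_HOURS = 4.0  # session length from the Quick Upgrade section; an assumption elsewhere


def handover_session(n: int, full_sessions: int = 2) -> int:
    """Session at which Step 2 starts, if session.setKeys ran in session n.

    The rest of session n elapses (+1), then `full_sessions` full sessions pass.
    """
    return n + 1 + full_sessions


def wait_hours(fraction_elapsed: float, full_sessions: int = 2,
               session_hours: float = SESSION_HOURS) -> float:
    """Hours to wait after submitting, given how much of session n had
    already elapsed (0.0 = session just started, 1.0 = about to end)."""
    if not 0.0 <= fraction_elapsed <= 1.0:
        raise ValueError("fraction_elapsed must be between 0 and 1")
    remaining = (1.0 - fraction_elapsed) * session_hours  # rest of session n
    return remaining + full_sessions * session_hours      # plus the full sessions


if __name__ == "__main__":
    n = 1200  # hypothetical value returned by session.currentIndex()
    print(f"setKeys in session {n}; start Step 2 at session {handover_session(n)}")
    print(f"wait between {wait_hours(1.0)} and {wait_hours(0.0)} hours")
```

With 4-hour sessions, Node A must therefore stay online for between 8 and 12 hours after the extrinsic, depending on how far into session N it was executed.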

Verify that Node A has handed authority over to Node B by inspecting its log for messages like the ones below: